CU Denver Gait Dataset

About the Dataset:

Hardware Specifications:

The dataset was captured using a Microsoft Kinect V2 camera and the Microsoft Kinect SDK 2.0.

Data Specifications:

All data was captured under IRB Protocol Number 18-2563.

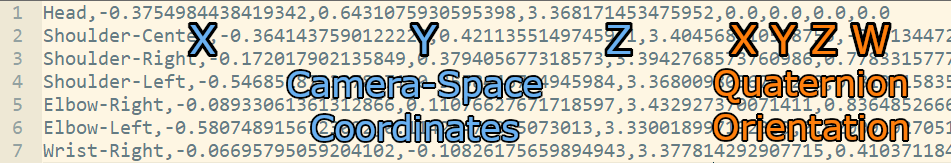

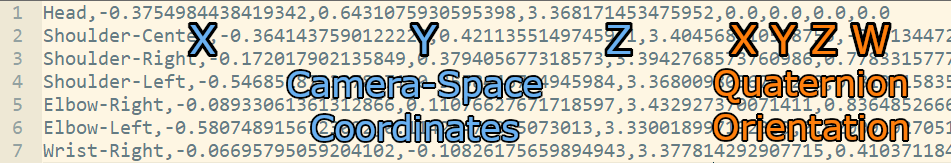

Data was captured at 30 frames per second. We captured both the joint positions (X,Y,Z) and rotations (X,Y,Z,W) provided by the Kinect SDK for 20 joints, and recorded them in a text file format. More information about the text file format can be found in the Data Format section.

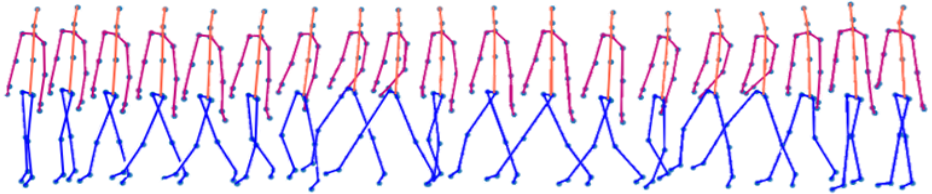

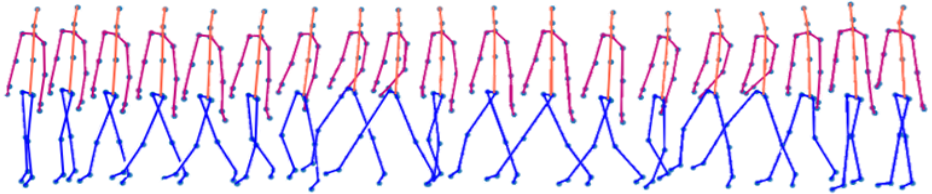

After capture, we filtered the data with a Double Exponential Filter, following the method described here.

To smooth the quaternion data, we filtered each component of the quaternion individually at each frame using the approach above. We then re-normalized the quaternion to have unit magnitude after the filtering process.
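The filtering steps above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes a Holt-style double exponential smoother, and the function names and the `alpha` and `gamma` values are hypothetical placeholders, since the actual filter parameters are not stated here.

```python
import math

def double_exponential_filter(samples, alpha=0.5, gamma=0.5):
    """Holt-style double exponential smoothing of a 1-D sequence.

    alpha (level weight) and gamma (trend weight) are assumed values.
    """
    if not samples:
        return []
    level = samples[0]
    trend = 0.0
    smoothed = [level]
    for x in samples[1:]:
        prev_level = level
        # Blend the new sample with the prediction from the previous level and trend.
        level = alpha * x + (1.0 - alpha) * (prev_level + trend)
        # Update the trend estimate from the change in level.
        trend = gamma * (level - prev_level) + (1.0 - gamma) * trend
        smoothed.append(level)
    return smoothed

def smooth_quaternions(quats, alpha=0.5, gamma=0.5):
    """Filter each (x, y, z, w) component independently, then re-normalize per frame."""
    if not quats:
        return []
    channels = [
        double_exponential_filter([q[i] for q in quats], alpha, gamma)
        for i in range(4)
    ]
    result = []
    for x, y, z, w in zip(*channels):
        norm = math.sqrt(x * x + y * y + z * z + w * w)
        if norm == 0.0:
            # Assumption: fall back to the identity rotation for a degenerate result.
            result.append((0.0, 0.0, 0.0, 1.0))
        else:
            result.append((x / norm, y / norm, z / norm, w / norm))
    return result
```

Filtering components independently generally leaves the quaternion slightly off unit length, which is why the re-normalization step is needed before the values are used as rotations.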

Data Format:

The format of the data is as follows:

Every frame of data is specified by 20 consecutive lines of data (one for each joint).

Each line contains the name of the joint, the XYZ Camera Space Coordinates, followed by the XYZW Quaternion Vector, in that order.

We collected the following 20 joints:

| Joint Name |
| --- |
| Head |
| Shoulder-Center |
| Shoulder-Right |
| Shoulder-Left |
| Elbow-Right |
| Elbow-Left |
| Wrist-Right |
| Wrist-Left |
| Hand-Right |
| Hand-Left |
| Spine |
| Hip-Center |
| Hip-Right |
| Hip-Left |
| Knee-Right |
| Knee-Left |
| Ankle-Right |
| Ankle-Left |
| Foot-Right |
| Foot-Left |
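As an illustration of the layout described above, the following sketch groups lines into frames of 20 joints. It is only a sketch under stated assumptions: the field delimiter is not specified here, so the code assumes whitespace- or comma-separated fields and joint names without embedded spaces. The names `Joint`, `parse_joint_line`, `parse_frames`, and `frame_time_seconds` are hypothetical helpers, not part of the dataset.

```python
import re
from typing import Dict, Iterable, List, NamedTuple, Tuple

JOINTS_PER_FRAME = 20   # one line per joint, as described above
FRAME_RATE_HZ = 30.0    # capture rate stated in the Data Specifications

class Joint(NamedTuple):
    position: Tuple[float, ...]  # X, Y, Z in camera space
    rotation: Tuple[float, ...]  # X, Y, Z, W quaternion

def parse_joint_line(line: str) -> Tuple[str, Joint]:
    """Parse one line: joint name, 3 position values, 4 quaternion values."""
    # Assumption: fields are separated by whitespace and/or commas.
    fields = [f for f in re.split(r"[,\s]+", line.strip()) if f]
    if len(fields) != 8:
        raise ValueError(f"expected 8 fields, got {len(fields)}: {line!r}")
    values = [float(v) for v in fields[1:]]
    return fields[0], Joint(tuple(values[:3]), tuple(values[3:]))

def parse_frames(lines: Iterable[str]) -> List[Dict[str, Joint]]:
    """Group consecutive joint lines into frames of JOINTS_PER_FRAME joints."""
    rows = [parse_joint_line(l) for l in lines if l.strip()]
    if len(rows) % JOINTS_PER_FRAME != 0:
        raise ValueError("line count is not a multiple of 20")
    return [
        dict(rows[start:start + JOINTS_PER_FRAME])
        for start in range(0, len(rows), JOINTS_PER_FRAME)
    ]

def frame_time_seconds(frame_index: int) -> float:
    """Approximate timestamp of a frame, assuming a steady 30 frames per second."""
    return frame_index / FRAME_RATE_HZ
```

A file could then be read with something like `parse_frames(open(path))`, where `path` points to one of the dataset's text files; each resulting frame maps a joint name such as `Head` to its position and rotation.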

Downloads:

The dataset may be downloaded in multiple formats:

- Filtered ZIP format
  - Contains a filtered version of the dataset, where both joint positions and orientations have been filtered using a Double Exponential Filter

Acknowledgement:

If you perform research using our dataset or methods, please cite both our dataset and paper in your publication using the following template:

"We used the CU Denver Gait Dataset, obtained from URL here"

Please also cite our paper, "ST-DeepGait: A Spatio-Temporal Deep Learning Approach for Human Gait Recognition" (currently in review).